News

Chris Frölich defends his thesis

Chris Frölich became the first PhD graduate of the FMLS group in July, successfully defending his wonderful thesis "Imprecise Probabilities in Machine Learning: Structure and Semantics".

FMLS hosts LuWSI

We are happy to announce that the FMLS group will host the 2nd Workshop on Learning Under Weakly Structured Information. On the 7th and 8th of April, the workshop will address topics that usually fly below the radar of the fast-paced field of theoretical machine learning. Stay tuned!

Slow Science

Machine learning research is notoriously fast-paced. Can one get off the treadmill and embrace a notion of slow science? I am pleased to be able to argue yes by example! I have recently had two works published, both after a very long gestation.

The first, "Geometry and Calculus of Losses", reports work that started in 2012 (not a typo). It presents a beautiful (yes, it is!) geometric theory of loss functions, with all sorts of interesting connections to convex geometry and economics.

The second, "Information Processing Equalities and the Information-Risk Bridge", builds upon the first and revisits what is arguably the most basic result in information theory, namely the "information processing inequality". It shows that this inequality can in fact be written as an equality, albeit one with different measures of information on each side. It also presents a more general theory of the bridge between information and risk, showing that the two are generally two sides of the same coin. The earliest version of one of the main results was worked out in 2007 (again, not a typo). It was published last week... after some 17 years!
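For readers who have not met the classical inequality, here is a small numerical illustration. It is also widely known as the data processing inequality. Pushing two distributions P and Q through the same Markov kernel (a "channel") cannot increase their KL divergence. This sketch shows only that classical inequality for one divergence and one example channel. It does not implement the equality or the more general information measures from the paper. The helper names `kl_divergence` and `push_through_channel` and the numbers are hypothetical.

```python
import math


def kl_divergence(p, q):
    """KL divergence D(p || q) in nats for discrete distributions given as lists.

    Assumes q[i] > 0 wherever p[i] > 0; terms with p[i] == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def push_through_channel(p, channel):
    """Output distribution when the input has distribution p and
    channel[i][j] is the probability of output j given input i."""
    n_out = len(channel[0])
    return [sum(p[i] * channel[i][j] for i in range(len(p))) for j in range(n_out)]


p = [0.7, 0.2, 0.1]
q = [0.2, 0.3, 0.5]
# A Markov kernel (each row sums to 1) that merges three inputs into two noisy outputs.
channel = [
    [0.9, 0.1],
    [0.5, 0.5],
    [0.2, 0.8],
]

before = kl_divergence(p, q)
after = kl_divergence(push_through_channel(p, channel), push_through_channel(q, channel))
print(f"D(P||Q) = {before:.4f}, D(PK||QK) = {after:.4f}")
assert after <= before  # processing cannot create information
```

The inequality holds for every choice of channel, not just this one. The gap between the two sides is what an "equality" version has to account for.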

This work took so long largely because of external events: my work on Technology and Australia's Future, which totally absorbed my efforts for 2.5 years; the absorption of NICTA into CSIRO (another several years, not at all pleasant); my move from Australia to Germany; and a serious health issue in 2022 (also no fun).

I am pleased to see both these works finally published. :-)

Bob

Bob's Inaugural Lecture

Systems of Precision - Intersectionality meets Measurability

In the usual way of using probability theory, you posit an algebra of events. Each such event has a probability (that is, it is "measurable"). The assumption that the set of events that have a probability is an algebra means that if A and B are both events, then "A and B" is also an event (the intersection also has a probability). What happens if you do not make that assumption? In this paper (by Rabanus Derr and Bob Williamson) we provide an answer: you recover the theory of imprecise probability! This builds an intriguing bridge between measure theory and notions from social science such as intersectionality. One conclusion is that measurability should not be construed as a mere technical annoyance; rather, it is a crucial part of how you choose to model the world.
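A toy illustration of why dropping closure under intersection leads to imprecision follows. It is a sketch under our own assumptions, not the construction from the paper. On a four-point space, assign probabilities to A, B, their complements and Ω, but not to A ∩ B. Then every distribution that agrees with these assignments is admissible, and P(A ∩ B) is pinned down only to an interval. Here that interval is the classical Fréchet range [max(0, P(A) + P(B) - 1), min(P(A), P(B))]. The helpers `compositions`, `prob` and `bounds_on_event` are hypothetical. The search runs over distributions on a rational grid, so it is exact here only because the interval's endpoints lie on the grid. In general it gives an inner approximation.

```python
from fractions import Fraction


def compositions(total, parts):
    """Yield every tuple of `parts` non-negative integers summing to `total`."""
    if parts == 1:
        yield (total,)
        return
    for first in range(total + 1):
        for rest in compositions(total - first, parts - 1):
            yield (first,) + rest


def prob(dist, event):
    """Probability of an event (a set of outcomes) under a dict outcome -> Fraction."""
    return sum((dist[w] for w in event), Fraction(0))


def bounds_on_event(outcomes, assessments, target, grid=20):
    """Min and max of P(target) over all grid distributions (step 1/grid)
    that agree exactly with `assessments` (a dict: frozenset -> Fraction)."""
    values = []
    for counts in compositions(grid, len(outcomes)):
        dist = {w: Fraction(c, grid) for w, c in zip(outcomes, counts)}
        if all(prob(dist, e) == p for e, p in assessments.items()):
            values.append(prob(dist, target))
    if not values:
        raise ValueError("no grid distribution matches the assessments")
    return min(values), max(values)


outcomes = [1, 2, 3, 4]
omega = frozenset(outcomes)
A = frozenset({1, 2})
B = frozenset({2, 3})
# Probabilities for A, B, their complements and omega, but NOT for A & B = {2}.
assessments = {
    A: Fraction(6, 10),
    omega - A: Fraction(4, 10),
    B: Fraction(7, 10),
    omega - B: Fraction(3, 10),
    omega: Fraction(1),
}
lower, upper = bounds_on_event(outcomes, assessments, A & B)
print(lower, upper)  # 3/10 3/5
```

The pair (lower, upper) behaves like a lower and upper probability for the non-measurable event. That is the flavour of imprecise probability the paragraph above refers to.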

Paper on Calibration at FAccT

Our paper "The Richness of Calibration" has appeared in the proceedings of FAccT 2023.

Two new papers

We recently finished two papers on imprecise probabilities.

The set structure of precision: coherent probabilities on Pre-Dynkin Systems

This shows a relationship between the set system of measurable events and the imprecision of probabilities, and thus offers a novel way of generalising traditional probabilities that is potentially useful for a range of problems, including modelling "intersectionality".
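To make the difference between the two kinds of set system concrete, here is a small sketch that checks a finite set system for each closure property. It assumes the finite form of the definition: a pre-Dynkin system contains Ω and is closed under complements and under unions of disjoint members. An algebra must also be closed under intersections. The functions `is_pre_dynkin` and `is_algebra` and the two-attribute population are hypothetical illustrations, not code from the paper. In the example, "women" and "young" are events, but the intersectional group "young women" is not.

```python
from itertools import combinations


def is_pre_dynkin(omega, system):
    """Assumed finite definition: contains omega, closed under complement,
    and closed under unions of disjoint members."""
    if omega not in system:
        return False
    if any(omega - a not in system for a in system):
        return False
    return all((a | b) in system for a, b in combinations(system, 2) if not (a & b))


def is_algebra(omega, system):
    """Contains omega, closed under complement and under pairwise intersection."""
    if omega not in system:
        return False
    if any(omega - a not in system for a in system):
        return False
    return all((a & b) in system for a, b in combinations(system, 2))


# Hypothetical population described by two attributes.
omega = frozenset({("f", "young"), ("f", "old"), ("m", "young"), ("m", "old")})
women = frozenset(w for w in omega if w[0] == "f")
men = omega - women
young = frozenset(w for w in omega if w[1] == "young")
old = omega - young
system = {frozenset(), omega, women, men, young, old}

print(is_pre_dynkin(omega, system))  # True
print(is_algebra(omega, system))     # False: "young women" is not an event
```

Adding every intersection (and the resulting unions) would turn this system into an algebra. The point of the paper is to study what happens when one deliberately does not do that.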

and

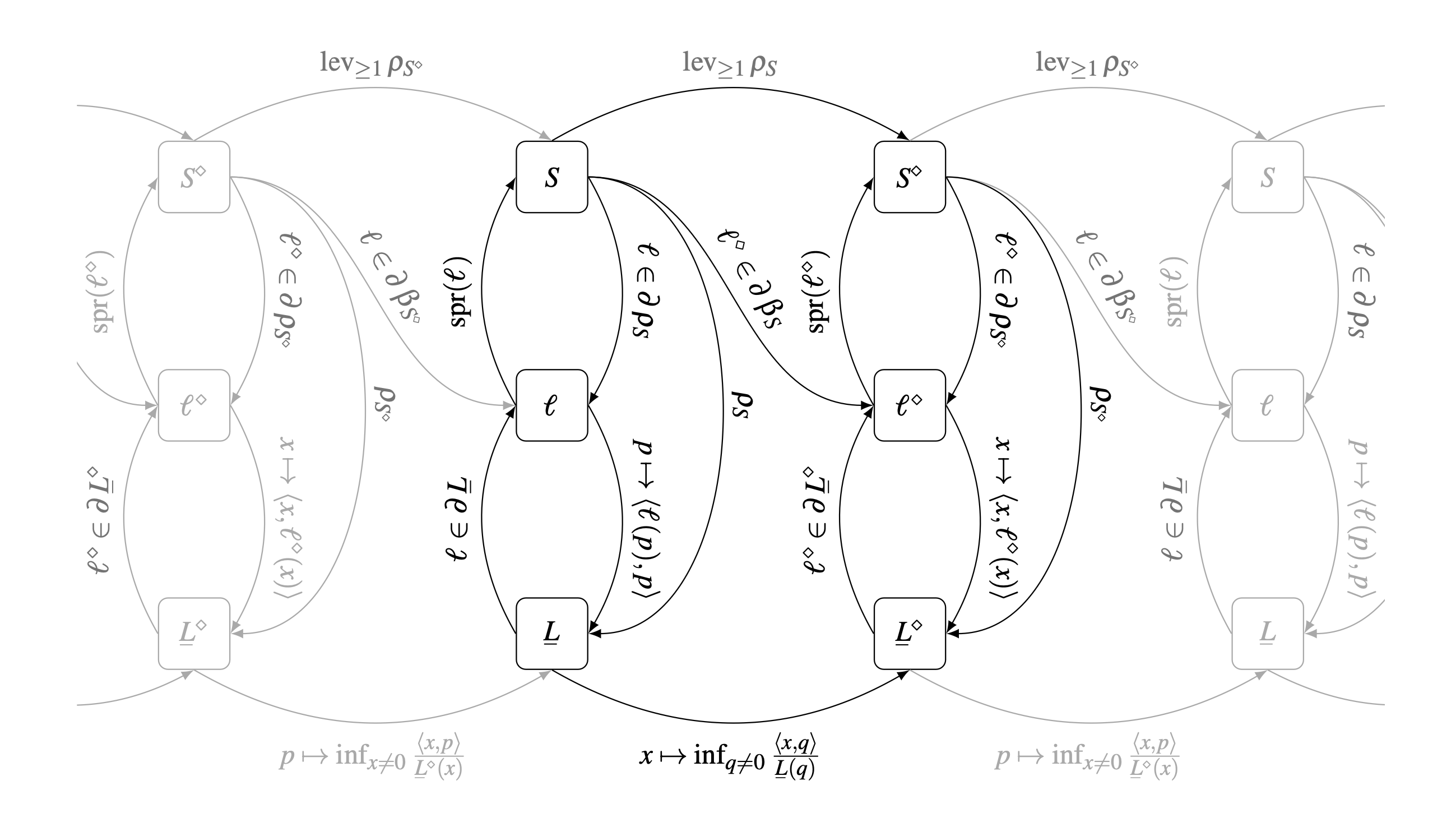

Strictly Frequentist Imprecise Probability

This shows that one can develop a strictly frequentist semantics for imprecise probabilities, whereby upper previsions arise from the set of cluster points of relative frequencies. This means the theory is applicable to all sequences, not just stochastic ones. We also present a converse result suggesting that the theory is the "right" thing, in the sense that every upper prevision can be derived from a (non-stochastic) sequence. The proof of this fact is constructive.
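As a rough numerical illustration of the idea, the following example uses a deterministic 0/1 sequence built from alternating blocks of 1s and 0s whose lengths double. The relative frequency of 1s never converges; it keeps oscillating between about 1/3 and 2/3. Those values bound the cluster points of the relative frequencies, so they play the role of a lower and upper probability for the outcome 1. The helpers `oscillating_sequence` and `tail_frequency_range` are hypothetical. Taking the min and max of the running average over the tail of a finite prefix only approximates the lim inf and lim sup. This is not the construction used in the paper.

```python
def oscillating_sequence(num_blocks):
    """Hypothetical example: alternating blocks of 1s and 0s with lengths
    1, 2, 4, 8, ..., so the relative frequency of 1s never converges."""
    seq = []
    for k in range(num_blocks):
        symbol = 1 if k % 2 == 0 else 0
        seq.extend([symbol] * (2 ** k))
    return seq


def tail_frequency_range(seq, gamble, burn_in):
    """Min and max of the running average of gamble(x) over positions n >= burn_in.

    On a finite prefix this only approximates the lim inf / lim sup of the
    running averages, i.e. the lower / upper prevision of the gamble."""
    total = 0.0
    running = []
    for n, x in enumerate(seq, start=1):
        total += gamble(x)
        if n >= burn_in:
            running.append(total / n)
    return min(running), max(running)


def indicator_of_one(x):
    """Gamble that pays 1 when the outcome is 1, else 0."""
    return 1.0 if x == 1 else 0.0


seq = oscillating_sequence(16)  # 2**16 - 1 = 65535 outcomes
lower, upper = tail_frequency_range(seq, indicator_of_one, burn_in=1000)
print(f"lower ~ {lower:.4f}, upper ~ {upper:.4f}")  # lower ~ 0.3333, upper ~ 0.6668
```

The same helper works for any real-valued gamble, not only indicators. The design choice of a deterministic sequence is deliberate: nothing in the computation assumes the data were generated stochastically.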